Whenever the term “AI agents” is used in the context of generative AI applications, people tend to imagine systems where large language models (LLMs) autonomously perform complex tasks from start to finish. However, most real-world applications don’t require complete, unchecked LLM autonomy. A tweet from Andrew Ng on this point really resonated with me, specifically where he says:

“Rather than having to choose whether or not something is an agent in a binary way, I thought, it would be more useful to think of systems as being agent-like to different degrees.”

It helps to take a more holistic approach to autonomy and instead use the term “agentic systems” to also encompass systems that lie somewhere between simply prompting an LLM and fully autonomous AI agents.

Therefore, we define agentic systems as those that use LLMs to decide the execution flow of an application with varying degrees of autonomy. This ranges from implementations where LLMs make limited decisions within a structured workflow to those where LLMs independently execute tasks with minimal human intervention. The degree of “agenticity” is defined by how much decision-making authority is delegated to the LLM.

In this article, we will cover seven practical design patterns for agentic systems that we have implemented with our customers. For each pattern, we will look at how it works, when to use it, and specific use cases.

Controlled flows

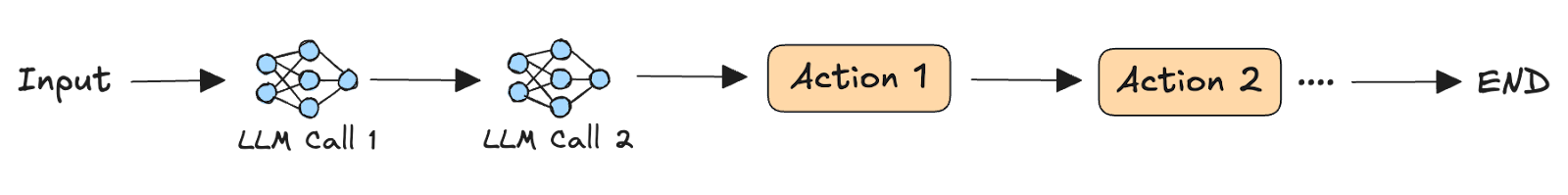

Controlled flows offer a low-risk method to integrate LLMs into your workflows. In this design pattern, LLMs actively perform tasks such as content generation and analysis within each step of the workflow, but the sequence of steps and the rules for moving between them are fixed by design. This means that the LLM has the freedom to operate within each step but cannot choose which step comes next in the process.
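As a rough illustration of the idea above, here is a minimal sketch of a controlled flow for a content review. It assumes a hypothetical `LLM` callable that maps a prompt to text; `run_content_review` and `fake_llm` are illustrative names, not part of any library. The step order is hard-coded in ordinary Python, and the model only fills in the work inside each step.

```python
from typing import Callable

# Assumption: any function that takes a prompt and returns text can act as the LLM.
LLM = Callable[[str], str]


def run_content_review(document: str, llm: LLM) -> dict:
    """Run a fixed three-step review; the LLM never chooses the next step."""
    # Step 1: grammar and punctuation pass.
    corrected = llm(f"Fix grammar and punctuation:\n{document}")
    # Step 2: style guide check on the corrected text.
    style_notes = llm(f"List style guide violations:\n{corrected}")
    # Step 3: fixed hand-off to a human technical reviewer.
    return {
        "document": corrected,
        "style_notes": style_notes,
        "status": "awaiting_human_review",
    }


def fake_llm(prompt: str) -> str:
    """Toy stand-in for a model so the sketch runs without an API key."""
    instruction, _, body = prompt.partition("\n")
    if instruction.startswith("Fix grammar"):
        return body.replace("teh", "the")
    return "no violations found"


result = run_content_review("Restart teh server.", fake_llm)
print(result)
```

Swapping `fake_llm` for a real model client would not change the control flow, which is the point of this pattern.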

NOTE: Throughout this blog, multiple LLM calls in any diagram can represent calls to a single LLM with different prompts or calls to different LLMs.

When to use

This pattern is ideal in scenarios where the task can be decomposed into a well-defined sequence of subtasks, some of which can be reliably handled by LLMs. The main goal here is to retain the system’s ability to produce reliable and/or deterministic outcomes despite the use of LLMs.

Use cases

- Content review: Use LLMs to partially automate content reviews (rectify punctuation and grammatical errors, verify content against style guides, fact-check), then forward the document for human technical review.
- Natural language to query: Use LLMs as part of a sequential self-querying workflow to identify the query parameters such as data sources and query filters and synthesize the final answer.

LLM as a router

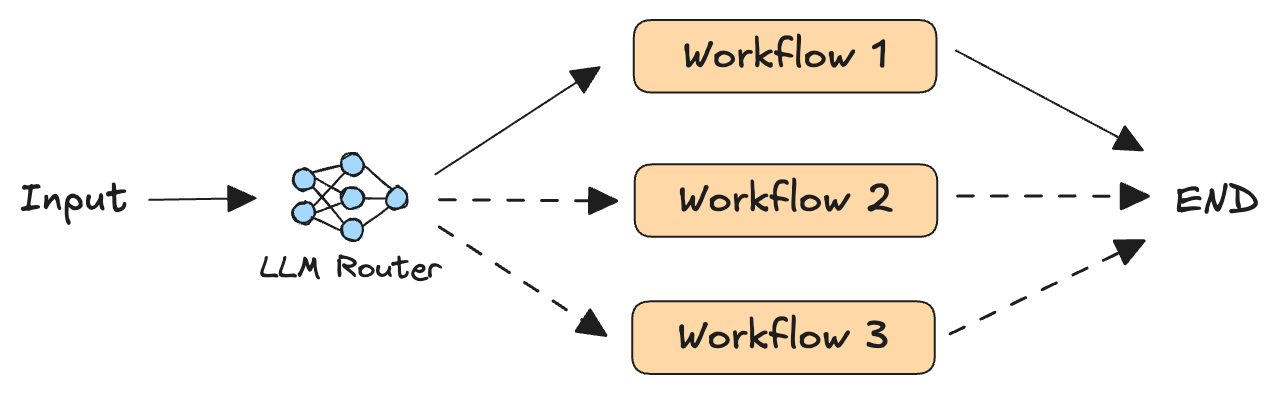

In this design pattern, queries are routed among a set number of specialized workflows based on an LLM’s categorization of the input. Think of this as a smart switch that looks at incoming information and decides, “Where should this go next?” These specialized workflows may involve the same LLMs with different prompts; a combination of small and large LLMs; domain-specific, fine-tuned models; or microservices and APIs.
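The routing step described above might look something like the following sketch. The route names, the `ROUTES` table, and `fake_classifier` are assumptions made for illustration; in practice each handler could be another LLM call, a fine-tuned model, or an API.

```python
from typing import Callable

# Assumption: any function that takes a prompt and returns text can act as the LLM.
LLM = Callable[[str], str]
Handler = Callable[[str], str]

# Hypothetical specialized workflows, keyed by the label the classifier may return.
ROUTES: dict[str, Handler] = {
    "billing": lambda query: f"[billing workflow] {query}",
    "technical": lambda query: f"[technical workflow] {query}",
    "general": lambda query: f"[general workflow] {query}",
}


def route(query: str, classifier: LLM) -> str:
    """Ask the LLM for a category, then dispatch to the matching workflow."""
    labels = ", ".join(ROUTES)
    prompt = f"Classify the request as one of: {labels}.\nRequest: {query}"
    label = classifier(prompt).strip().lower()
    # Fall back to the general workflow if the label is outside the fixed set.
    handler = ROUTES.get(label, ROUTES["general"])
    return handler(query)


def fake_classifier(prompt: str) -> str:
    """Toy keyword classifier standing in for a real model."""
    request = prompt.rsplit("Request: ", 1)[-1].lower()
    if "invoice" in request or "refund" in request:
        return "Billing"
    if "error" in request or "crash" in request:
        return "technical"
    return "unsure"


print(route("I need a refund", fake_classifier))
```

The fallback matters because a model can return a label that is not in the set; here the sketch sends such requests to the general workflow rather than failing.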

When to use

This pattern works well when incoming requests span diverse topics and complexity levels, provided they can be reliably categorized based on their content. The main goal is to ensure accurate responses despite the broad scope of the application’s inputs.

Use cases

- Customer service: Based on the nature of the request, route customer service requests to downstream processes for different departments.
- Resource-efficient routing: Route simple questions to smaller and/or cheaper LLMs and complex questions to reasoning models.

Parallelization

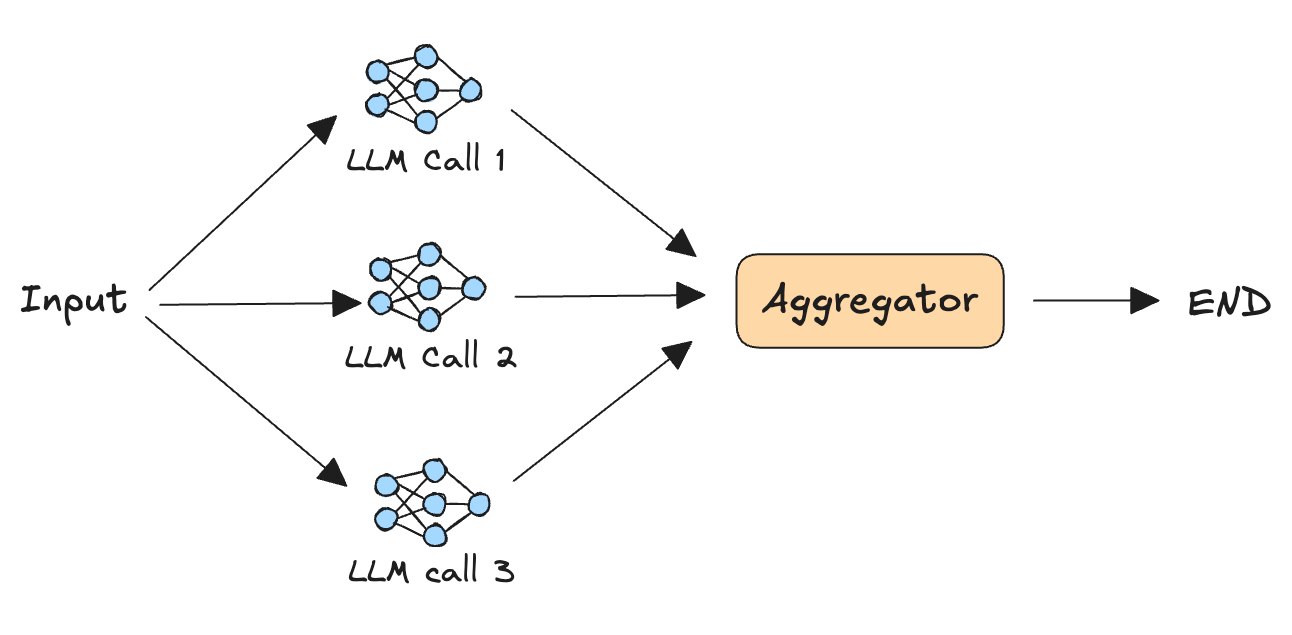

In this design pattern, several LLMs work simultaneously on the same task or on subtasks of a complex task, and their outputs are aggregated by another LLM or by custom logic.

When to use

This pattern is useful for handling tasks that can be split into independent subtasks. Running these subtasks in parallel offers two key benefits: reduced overall latency and improved focus, as each LLM can concentrate on solving specific parts of the problem. In some cases, having multiple LLMs attempt the same task and comparing or combining their results can also improve reliability.
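One way to sketch this is to fan out independent prompts with a thread pool and aggregate the results with plain code. The aspect names, `judge_in_parallel`, and `fake_judge` below are assumptions for illustration, and the sketch assumes the model replies with a bare number, which a real model may not always do.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Assumption: any function that takes a prompt and returns text can act as the LLM.
LLM = Callable[[str], str]

# Hypothetical evaluation aspects; each one becomes an independent subtask.
ASPECTS = ["accuracy", "clarity", "tone"]


def judge_in_parallel(response: str, llm: LLM) -> dict[str, float]:
    """Score each aspect concurrently, then aggregate with custom logic."""
    prompts = [
        f"Rate the {aspect} of this response from 1 to 5. Reply with a number only.\n{response}"
        for aspect in ASPECTS
    ]
    with ThreadPoolExecutor(max_workers=len(prompts)) as pool:
        # pool.map returns results in the same order as the prompts.
        raw_scores = list(pool.map(llm, prompts))
    scores: dict[str, float] = {
        aspect: float(raw) for aspect, raw in zip(ASPECTS, raw_scores)
    }
    # Aggregation step: a simple mean instead of another LLM call.
    scores["overall"] = sum(scores.values()) / len(ASPECTS)
    return scores


def fake_judge(prompt: str) -> str:
    """Toy judge that returns a fixed score per aspect."""
    first_line = prompt.splitlines()[0]
    if "accuracy" in first_line:
        return "5"
    if "clarity" in first_line:
        return "4"
    return "3"


print(judge_in_parallel("The service restarts automatically.", fake_judge))
```

Threads suit this case because LLM calls are typically network-bound; the total latency is then close to that of the slowest call rather than the sum of all calls.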

Use cases

- LLM-as-a-judge: A common technique for evaluating LLM responses is to use strong LLMs as judges. Using the parallelization design pattern, you can have multiple LLMs, or a single LLM with different prompts, simultaneously evaluate different aspects of the response.
- Code generation: Given a prompt, use different LLMs to generate code. Then, execute each of the code snippets, use an LLM to analyze their efficiency, and return the most efficient one.

Reflect and critique

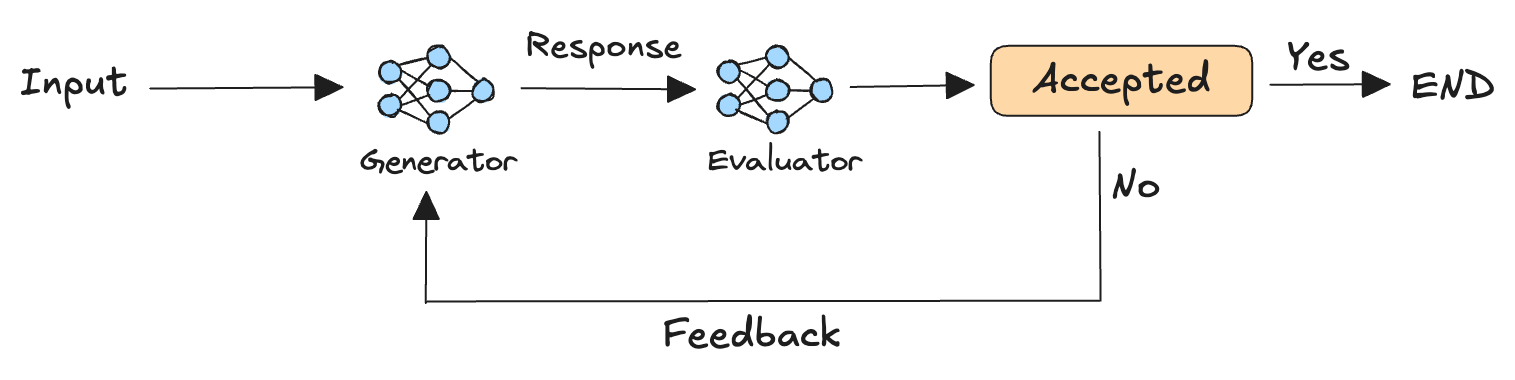

In this design pattern, one LLM (the generator) generates a response while a second LLM (the evaluator) reflects on it and provides critique or feedback. This process continues in a loop until the evaluator determines that the response is acceptable.
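The generator and evaluator loop can be sketched as below. The `APPROVED` keyword, the prompt wording, and the `max_rounds` cap are assumptions added for illustration; the cap is there so the loop cannot run forever if the evaluator never accepts a draft.

```python
from typing import Callable

# Assumption: any function that takes a prompt and returns text can act as the LLM.
LLM = Callable[[str], str]


def reflect_and_critique(task: str, generator: LLM, evaluator: LLM, max_rounds: int = 3) -> str:
    """Revise a draft until the evaluator approves it or the round limit is hit."""
    draft = generator(task)
    for _ in range(max_rounds):
        feedback = evaluator(
            f"Task: {task}\nDraft: {draft}\nReply APPROVED or give feedback."
        )
        if feedback.strip().upper().startswith("APPROVED"):
            break
        # Feed the critique back to the generator for the next revision.
        draft = generator(
            f"Task: {task}\nDraft: {draft}\nFeedback: {feedback}\nRevise the draft."
        )
    return draft


def fake_generator(prompt: str) -> str:
    """Toy generator: produces a vague draft first, a specific one after feedback."""
    if "Feedback:" in prompt:
        return "Uptime was 99.9% in March."
    return "Uptime was good."


def fake_evaluator(prompt: str) -> str:
    """Toy evaluator: approves only drafts that contain a percentage."""
    return "APPROVED" if "%" in prompt else "Include the exact uptime figure."


print(reflect_and_critique("Summarize March uptime", fake_generator, fake_evaluator))
```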

When to use

This pattern is effective for tasks where iterative refinement of LLM responses based on human-like feedback can yield more accurate, reliable, or cohesive results. Since LLMs are used as evaluators, the evaluation criteria should be clearly defined so that the LLM can effectively reason through them and provide human-like feedback.

Use cases

- Report generation: Have the evaluator LLM check the generated report for adherence to style guides, inclusion of specific details, diagrams, and analyses, and make recommendations to improve it.
- Code refinement: Given a prompt, the generator LLM writes code while the evaluator LLM acts as a code reviewer, suggesting ways to optimize the code.

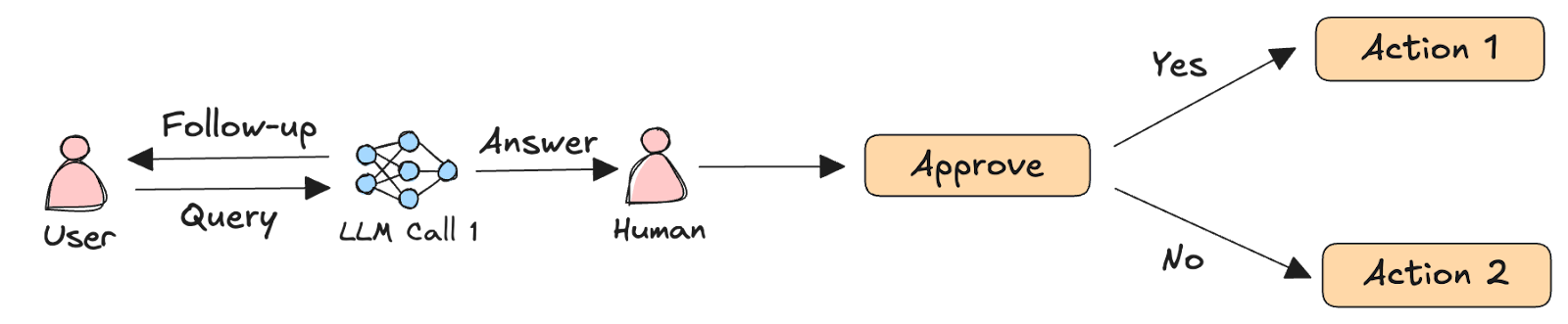

Human in the loop

This design pattern incorporates human input into an otherwise automated LLM-based pipeline. This enables LLMs to seek clarification and further details during multi-turn conversations while allowing humans to review, validate, edit, or override LLM outputs at critical points in the pipeline. This can significantly improve the application’s reliability, especially for sensitive tasks.
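A human checkpoint can be as simple as a callback that the pipeline must wait on before finalizing anything. In this sketch, `process_claim`, the `Reviewer` hook, and the `APPROVE`/`DENY` labels are hypothetical; in a real system the hook might wrap a review queue or a UI rather than a plain function.

```python
from typing import Callable

# Assumption: any function that takes a prompt and returns text can act as the LLM.
LLM = Callable[[str], str]
# Hypothetical reviewer hook: gets the claim and the LLM recommendation, returns the final decision.
Reviewer = Callable[[str, str], str]


def process_claim(claim: str, llm: LLM, ask_human: Reviewer) -> dict:
    """Let the LLM recommend, but leave the final decision to a person."""
    recommendation = llm(f"Recommend APPROVE or DENY, then explain why:\n{claim}")
    # The pipeline pauses here; nothing is finalized without a human decision.
    decision = ask_human(claim, recommendation)
    return {
        "recommendation": recommendation,
        "decision": decision,
        # True when the human decision differs from the LLM recommendation.
        "overridden": not recommendation.startswith(decision),
    }


def fake_llm(prompt: str) -> str:
    """Toy model that always recommends denial."""
    return "DENY: receipt is missing"


def fake_adjuster(claim: str, recommendation: str) -> str:
    """Toy human reviewer who overrides the recommendation."""
    return "APPROVE"


print(process_claim("Claim 1: physiotherapy session", fake_llm, fake_adjuster))
```

Recording whether the human overrode the model, as the `overridden` flag does here, is one possible way to track how often the LLM recommendation is trusted.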

When to use

This pattern is particularly useful in scenarios where complete LLM-based automation is either not feasible due to the system’s fundamental requirements or undesirable due to the potential consequences of a bad outcome. Human input for clarifications and follow-ups, however, can benefit all LLM-based workflows since natural language can be ambiguous.

Use cases

- Computer use: This technique uses LLMs to automate web-based tasks, but certain authentication steps (logins, 2FA, CAPTCHAs) inherently require human interaction and cannot be automated.
- Health insurance claims processing: Use LLMs to analyze claims and provide detailed reasoning for approval or denial recommendations. However, human adjusters make final determinations after reviewing the analysis.

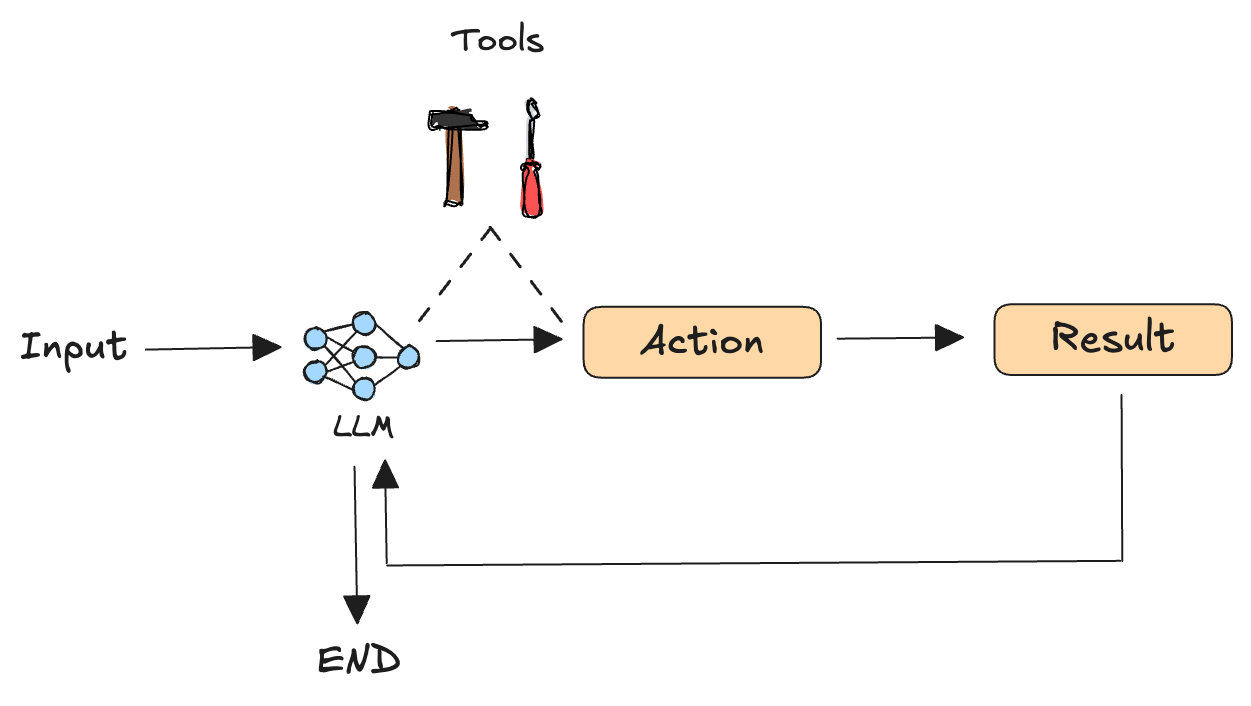

Agents

People typically refer to this design pattern when they say “AI agents.” The main difference between agents and the design patterns mentioned earlier is that agents use LLMs to determine the sequence of steps required to complete a particular task. They do this by taking actions with the help of tools and reasoning through the results of their previous actions to inform their next action. This makes agents extremely flexible and capable of handling a wide range of complex tasks, provided they have access to the right tools.

The diagram above shows a single-agent architecture, which consists of just one LLM with access to tools.
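A single-agent loop of this kind can be sketched as follows. The JSON action format, the `word_count` tool, and the `max_steps` limit are assumptions made for this example rather than a standard protocol; the key property is that the LLM, not the surrounding code, decides which action comes next.

```python
import json
from typing import Callable

# Assumption: any function that takes a prompt and returns text can act as the LLM.
LLM = Callable[[str], str]
Tool = Callable[[str], str]

# Hypothetical reply format the model is asked to follow.
INSTRUCTIONS = 'Reply with JSON: {"tool": <name>, "input": <text>} or {"final": <answer>}'


def run_agent(task: str, llm: LLM, tools: dict[str, Tool], max_steps: int = 5) -> str:
    """Let the LLM pick tools step by step until it returns a final answer."""
    history = [f"Task: {task}"]
    for _ in range(max_steps):
        prompt = "\n".join(history + [f"Tools: {', '.join(tools)}", INSTRUCTIONS])
        action = json.loads(llm(prompt))
        if "final" in action:
            return action["final"]
        # Run the chosen tool and record the result for the next reasoning step.
        observation = tools[action["tool"]](action["input"])
        history.append(f"Action: {action['tool']}({action['input']}) -> {observation}")
    return "Stopped: step limit reached"


def fake_llm(prompt: str) -> str:
    """Toy model: calls the tool once, then answers using the observation."""
    observations = [
        line.split(" -> ")[-1]
        for line in prompt.splitlines()
        if line.startswith("Action:")
    ]
    if not observations:
        return json.dumps({"tool": "word_count", "input": "agents pick their own steps"})
    return json.dumps({"final": f"The text has {observations[-1]} words."})


tools = {"word_count": lambda text: str(len(text.split()))}
print(run_agent("Count the words", fake_llm, tools))
```

A production loop would also need to handle malformed JSON and unknown tool names, which this sketch leaves out for brevity.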

When to use

AI agents are ideal for tasks that lack a fixed, structured workflow, can tolerate high latency, and can accept non-deterministic outputs.

Use cases

- Adaptive learning: Use agents to analyze students’ learning patterns and performance to decide which topics to focus on and adjust difficulty levels.
- Building software apps: Given a prompt, agents can iteratively generate, test, debug, and refine code to create fully functional software apps, including frontend, backend, database integrations, and authentication.

NOTE: You can combine certain aspects of other design patterns, such as human-in-the-loop and reflect-and-critique, with AI agents to make them more reliable.

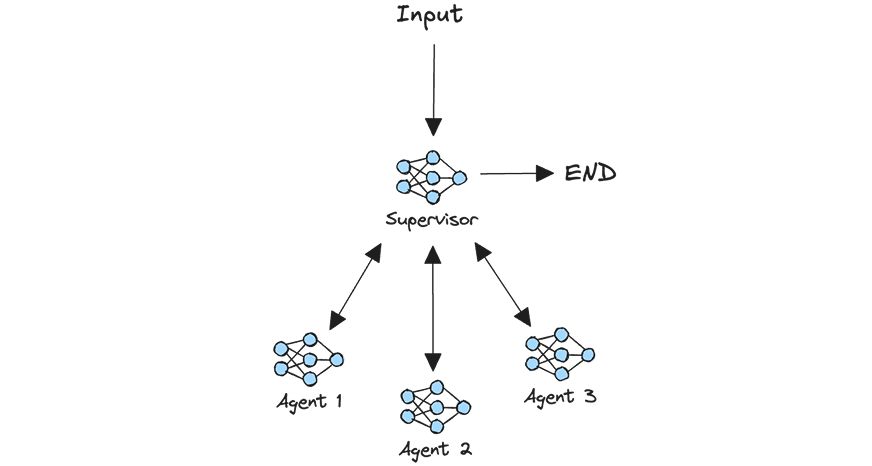

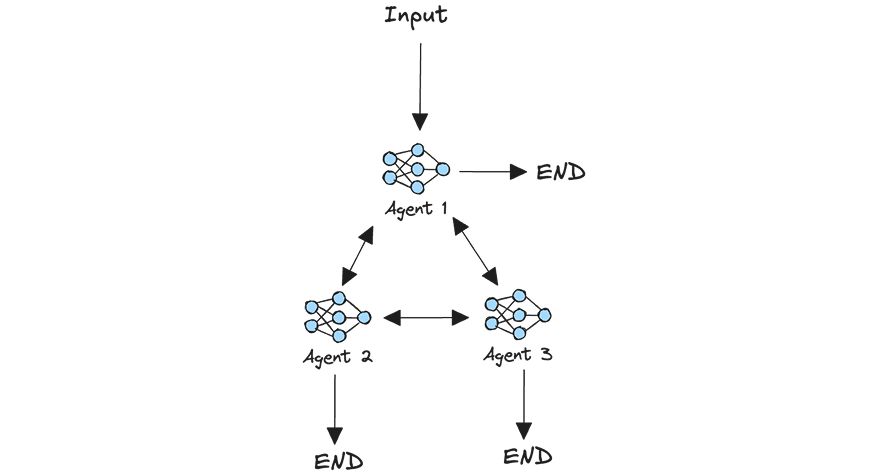

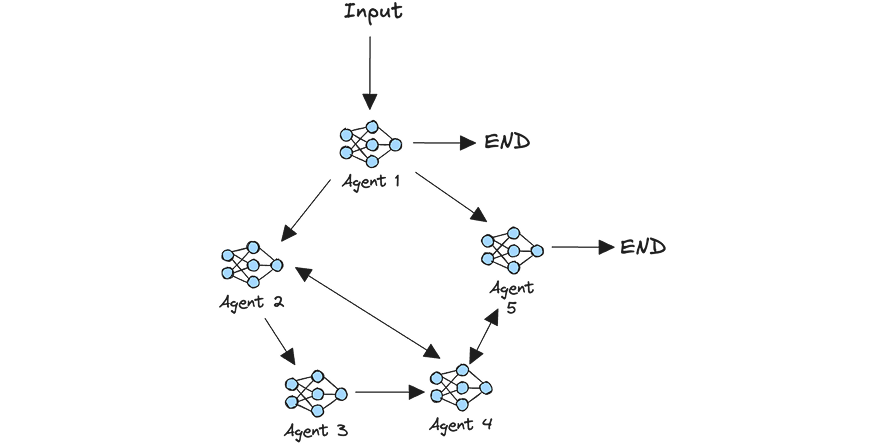

Multi-agent

This design pattern takes AI agents a step further: multiple agents work together to accomplish complex tasks. There are several ways to architect these systems depending on the needs of your application:

Supervisor: A single agent (supervisor) interfaces with a group of agents to determine the next course of action.

Network: Each agent can communicate with every other agent and decide which one to call next or end the execution.

Custom: You decide which agents can interact with each other and how control flows between them.
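To make the supervisor variant more concrete, here is a minimal sketch. The worker agents are stubbed as plain functions (in a real system each would be its own agent loop), and the `FINISH` keyword, the agent names, and the `max_turns` limit are assumptions made for illustration.

```python
from typing import Callable

# Assumption: any function that takes a prompt and returns text can act as the LLM.
LLM = Callable[[str], str]
# Each worker agent is stubbed as a function from the transcript so far to its contribution.
Agent = Callable[[str], str]


def run_supervisor(task: str, supervisor: LLM, agents: dict[str, Agent], max_turns: int = 4) -> list[str]:
    """Let a supervisor LLM decide which agent acts next until it says FINISH."""
    transcript = [f"Task: {task}"]
    for _ in range(max_turns):
        context = "\n".join(transcript)
        choice = supervisor(f"{context}\nNext agent ({', '.join(agents)}) or FINISH?").strip()
        # Stop on FINISH or on any name that is not a known agent.
        if choice not in agents:
            break
        transcript.append(f"{choice}: {agents[choice](context)}")
    return transcript


def fake_supervisor(prompt: str) -> str:
    """Toy supervisor: research first, then writing, then stop."""
    if "researcher:" not in prompt:
        return "researcher"
    if "writer:" not in prompt:
        return "writer"
    return "FINISH"


agents = {
    "researcher": lambda context: "found 3 relevant papers",
    "writer": lambda context: "drafted a summary from the research notes",
}
for line in run_supervisor("Summarize recent work on routing", fake_supervisor, agents):
    print(line)
```

A network setup would differ mainly in who makes the hand-off decision: each agent, rather than a central supervisor, would return the name of the next agent to call.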

When to use

Multi-agent architectures can push the limits of LLM autonomy to explore open-ended, novel, or experimental tasks, the outcomes of which are hard to predict. For practical, real-world use cases, start with simpler design patterns, and if you must, use multi-agent architectures only for tasks where unpredictable outcomes are acceptable. Bear in mind that fully autonomous multi-agent workflows mean higher costs, latency, and a system that is hard to debug, so use them with caution.

Use cases

- Scientific discovery: A team of AI agents collaborates to generate and test novel hypotheses in complex domains, with different agents specialized in literature review, experimental design, testing, and validation.
- Multimedia storytelling: AI agents with different artistic specialties work together to create dynamic storylines, characters, visuals, and music scores to explore new forms of interactive or generative storytelling.

Conclusion

In this article, we covered seven agentic design patterns ranging from tightly controlled systems to highly autonomous ones that push the boundaries of what’s possible with LLMs. While new design patterns are emerging weekly, chasing the latest innovation isn’t always the best strategy. Instead, focus on your specific needs and technical requirements. Start with the simplest architecture that could work, carefully evaluate its performance, and add components only if there is clear evidence that they are needed.

New to building AI agents? Visit our AI Learning Hub and Generative AI Showcase GitHub repo for an extensive list of content and examples to get started.

What are some agentic design patterns that have worked well for you? Share them with us in our Generative AI community forums.