[[LangChain_Academy_-Introduction_to_LangGraph-_Motivation.pdf]]

State

- Define the State schema as the input schema for all Nodes and Edges.

- Use Python’s `TypedDict` for type hints:

from typing_extensions import TypedDict

class State(TypedDict):
    graph_state: str

Nodes

- Nodes are Python functions. Each:

- Takes the state as input.

- Returns an update to the state; by default, each returned key overwrites its previous value.

- Example:

def node_1(state):
    print("---Node 1---")
    return {"graph_state": state['graph_state'] + " I am"}

def node_2(state):
    print("---Node 2---")
    return {"graph_state": state['graph_state'] + " happy!"}

def node_3(state):
    print("---Node 3---")
    return {"graph_state": state['graph_state'] + " sad!"}

Edges

- Edges connect nodes.

- Normal Edges: Always follow a specific path.

- Conditional Edges: Route dynamically based on logic.

- Example Conditional Edge:

import random
from typing import Literal

def decide_mood(state) -> Literal["node_2", "node_3"]:
    return "node_2" if random.random() < 0.5 else "node_3"

Graph Construction

- Use the StateGraph class:

- Add nodes, edges, and compile for validation.

- Visualize with a Mermaid diagram.

from IPython.display import Image, display
from langgraph.graph import StateGraph, START, END

# Build graph
builder = StateGraph(State)
builder.add_node("node_1", node_1)
builder.add_node("node_2", node_2)
builder.add_node("node_3", node_3)

# Logic
builder.add_edge(START, "node_1")
builder.add_conditional_edges("node_1", decide_mood)
builder.add_edge("node_2", END)
builder.add_edge("node_3", END)

# Compile
graph = builder.compile()

display(Image(graph.get_graph().draw_mermaid_png()))

Graph Invocation

- Execute a compiled graph through the runnable protocol, for example with its `invoke` method.

- Example:

- Input: `{"graph_state": "Hi, this is Lance."}`

- Nodes process state sequentially until reaching `END`.
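To make the flow concrete, here is a minimal pure-Python sketch of what running such a graph amounts to: call a node, merge its returned update into the state, then ask an edge which node comes next. This is not how LangGraph is implemented internally. `run_graph`, `always`, `pick_happy`, and the `END` string are hypothetical names used only for this illustration, and the router is deterministic (unlike `decide_mood`) so the result is reproducible.

```python
from typing import Callable, Dict, TypedDict


class State(TypedDict):
    graph_state: str


def node_1(state: State) -> dict:
    return {"graph_state": state["graph_state"] + " I am"}


def node_2(state: State) -> dict:
    return {"graph_state": state["graph_state"] + " happy!"}


def node_3(state: State) -> dict:
    return {"graph_state": state["graph_state"] + " sad!"}


END = "__end__"  # hypothetical sentinel for this sketch only


def always(target: str) -> Callable[[dict], str]:
    """Normal edge: always route to the same target."""
    return lambda state: target


def pick_happy(state: dict) -> str:
    """Conditional edge: deterministic stand-in for decide_mood."""
    return "node_2"


def run_graph(state: dict, nodes: Dict[str, Callable], edges: Dict[str, Callable], start: str) -> dict:
    """Run nodes one at a time, merging each returned update into the state."""
    current = start
    while current != END:
        update = nodes[current](state)
        state = {**state, **update}      # returned keys overwrite old values
        current = edges[current](state)  # the edge picks the next node
    return state


nodes = {"node_1": node_1, "node_2": node_2, "node_3": node_3}
edges = {"node_1": pick_happy, "node_2": always(END), "node_3": always(END)}

result = run_graph({"graph_state": "Hi, this is Lance."}, nodes, edges, "node_1")
print(result)
```

Swapping `pick_happy` for a random router would reproduce the two possible paths of the example graph.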

Messages

- Use `messages` for conversation roles:

- Types: `HumanMessage`, `AIMessage`, `SystemMessage`, `ToolMessage`.

- Example:

from langchain_core.messages import AIMessage, HumanMessage

messages = [AIMessage(content="What do you know about Orcas?", name="Model")]

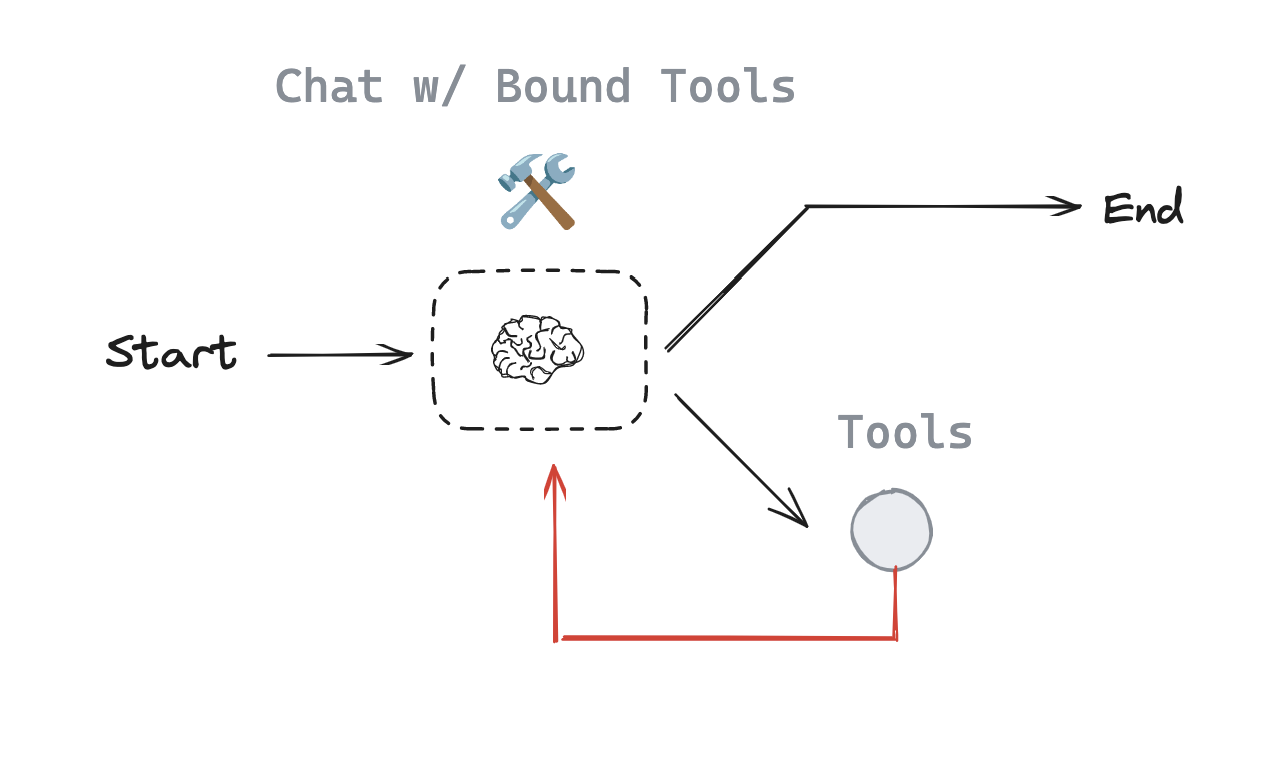

messages.append(HumanMessage(content="I want to learn about Orcas.", name="Lance"))Tools

- Tools enable models to interact with external systems.

- Bind Python functions to a chat model as tools using `bind_tools`:

def multiply(a: int, b: int) -> int:
    return a * b

# llm is a chat model instance that supports tool calling (defined elsewhere)
llm_with_tools = llm.bind_tools([multiply])

- Integrate with ToolNode for tool execution.
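A rough way to picture tool binding and execution, without any library: the function's name, docstring, and typed parameters are described to the model, the model answers with a tool call (a tool name plus arguments), and something then runs the matching function. The sketch below uses only the standard library. `describe_tool` and `execute_tool_call` are hypothetical helpers, and the dictionaries are simplified, not the exact schema that `bind_tools` or ToolNode use.

```python
import inspect


def multiply(a: int, b: int) -> int:
    """Multiply a and b."""
    return a * b


def describe_tool(func):
    """Build a simplified, hypothetical tool description from a function signature."""
    sig = inspect.signature(func)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {name: p.annotation.__name__ for name, p in sig.parameters.items()},
    }


def execute_tool_call(tool_call, tools):
    """Look up the requested tool by name and call it with the supplied arguments."""
    return tools[tool_call["name"]](**tool_call["args"])


schema = describe_tool(multiply)

# A tool call as a model might emit it: a tool name plus keyword arguments
call = {"name": "multiply", "args": {"a": 3, "b": 2}}
result = execute_tool_call(call, {"multiply": multiply})

print(schema)
print(result)
```

The type hints and docstring matter here because they are the only information available for describing the tool.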

ReAct Agent

- Extend with ReAct architecture:

- Act: Call tools.

- Observe: Receive tool outputs.

- Reason: Decide next actions.
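The act / observe / reason cycle can be sketched as a plain loop. This is an illustration under stated assumptions, not the LangGraph agent: `stub_model` is a hypothetical stand-in for an LLM that requests one tool call and then answers, messages are plain dictionaries rather than LangChain message objects, and `react_loop` with its `max_steps` guard is invented for this example.

```python
def multiply(a: int, b: int) -> int:
    return a * b


TOOLS = {"multiply": multiply}


def stub_model(messages):
    """Hypothetical stand-in for an LLM: ask for a tool once, then answer."""
    last = messages[-1]
    if last["role"] == "tool":
        # Reason: a tool result is available, so produce the final answer
        return {"role": "ai", "content": f"The result is {last['content']}.", "tool_calls": []}
    # Act: request a tool call
    return {
        "role": "ai",
        "content": "",
        "tool_calls": [{"name": "multiply", "args": {"a": 3, "b": 2}}],
    }


def react_loop(messages, max_steps=5):
    """Alternate between the model and the tools until the model stops calling tools."""
    for _ in range(max_steps):
        reply = stub_model(messages)
        messages.append(reply)
        if not reply["tool_calls"]:
            return messages  # no tool requested: the loop ends
        for call in reply["tool_calls"]:
            output = TOOLS[call["name"]](**call["args"])
            # Observe: feed the tool output back to the model
            messages.append({"role": "tool", "content": str(output)})
    return messages


history = react_loop([{"role": "human", "content": "Multiply 3 by 2."}])
for message in history:
    print(message["role"], "->", message["content"])
```

The loop ends when the model replies without requesting a tool, which is the same stopping condition a conditional edge would check in a graph.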

Agent with Memory

- Use persistence for state memory:

- `MemorySaver` checkpointing stores graph states.

from langgraph.checkpoint.memory import MemorySaver

memory = MemorySaver()
react_graph_memory = builder.compile(checkpointer=memory)

- Access saved states with `thread_id`.
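In LangGraph the thread ID is passed in the run configuration, for example `{"configurable": {"thread_id": "1"}}`. The idea behind it can be sketched with a toy in-memory store that keeps one saved state per thread. `ToyCheckpointer` and `run_turn` are hypothetical names for this illustration and do not reflect how `MemorySaver` works internally.

```python
class ToyCheckpointer:
    """Hypothetical in-memory store: one saved state per thread_id."""

    def __init__(self):
        self._states = {}

    def get(self, thread_id):
        # Unknown threads start from an empty conversation
        return self._states.get(thread_id, {"messages": []})

    def put(self, thread_id, state):
        self._states[thread_id] = state


def run_turn(checkpointer, thread_id, user_message):
    """Load the thread's saved state, append the new message, and save it back."""
    state = checkpointer.get(thread_id)
    state = {"messages": state["messages"] + [user_message]}
    checkpointer.put(thread_id, state)
    return state


memory = ToyCheckpointer()
run_turn(memory, "1", "Add 3 and 4.")
state_1 = run_turn(memory, "1", "Multiply that by 2.")  # same thread: history is kept
state_2 = run_turn(memory, "2", "Hello")                # new thread: starts empty

print(state_1["messages"])
print(state_2["messages"])
```

Reusing a thread ID continues the earlier conversation, while a new thread ID starts from a clean state.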

Deployment

Concepts

- LangGraph: Python/JS library for agent workflows.

- LangGraph API: Manages state and tasks.

- LangGraph Cloud: Hosted service for graph deployment.

- LangGraph Studio: IDE for local and cloud testing.

- LangGraph SDK: Python library for programmatic graph interaction.

Testing Locally

- Connect with local graphs via Studio-provided URL:

from langchain_core.messages import HumanMessage
from langgraph_sdk import get_client

# Top-level await: run this in a notebook or inside an async function
URL = "http://localhost:56091"
client = get_client(url=URL)

# List the assistants available at this URL
assistants = await client.assistants.search()

# Create a thread to hold the state of this run
thread = await client.threads.create()

input = {"messages": [HumanMessage(content="Multiply 3 by 2.")]}

async for chunk in client.runs.stream(thread['thread_id'], "agent", input=input, stream_mode="values"):
    if chunk.data and chunk.event != "metadata":
        print(chunk.data['messages'][-1])

- Stream graph execution state with `stream_mode="values"`.
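As a rough picture of what streaming values means, the generator below yields the full state after every step rather than only the final result. It is a standard-library sketch, not the SDK: `stream_values`, `add_tool_request`, and `add_answer` are hypothetical, and emitting the initial input as the first snapshot is an assumption of this example.

```python
def stream_values(state, nodes):
    """Yield the full state: first the input, then a snapshot after every node."""
    yield dict(state)
    for node in nodes:
        state = {**state, **node(state)}
        yield dict(state)


def add_tool_request(state):
    return {"messages": state["messages"] + ["ai: calling multiply(3, 2)"]}


def add_answer(state):
    return {"messages": state["messages"] + ["ai: The result is 6."]}


snapshots = list(
    stream_values({"messages": ["human: Multiply 3 by 2."]}, [add_tool_request, add_answer])
)

# Printing the last message of each snapshot mirrors the loop over chunks above
for snapshot in snapshots:
    print(snapshot["messages"][-1])
```

Each snapshot contains the whole message list so far, which is why the client code prints only the last message of each chunk.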
